Working With AI Generated Images


With the growing ubiquity of generative AI, artists and creators increasingly have to adapt to the demands of Grok, ChatGPT, or more dedicated image-generating AI services. That said, and unlike “prompt-artists”, artists and creatives will be acutely aware of AI’s limitations: however detailed the prompt, AI will still miss the mark to varying degrees, largely because it (obviously) doesn’t have the experience or artistic/creative vocabulary to understand what’s being asked of it in context. The workaround isn’t necessarily to ask AI to (re)generate images outright, but to have it break renders down into core components that can be ‘collaged’ or ‘composited’ properly in an image editor.

The Initial Image Prompts

When using AI to generate images, the basic workflow isn’t to have it generate and regenerate the same image, prompting incremental changes each time and having it chase a tail it cannot see. Instead, the idea is to iterate selectively on what the generator does produce, getting it to render sections or isolated elements of the base image, which avoids iterative devolution. Below is a simple text prompt. First is the default ‘style guide’ used by ChatGPT to define the ‘Chibi’ or anime style:

Create an image in a detailed anime aesthetic: expressive eyes, smooth cel-shaded coloring, and clean linework. Emphasize emotion and character presence, with a sense of motion or atmosphere typical of anime scenes…

Then the ‘cat’ prompt is appended to the style guide:

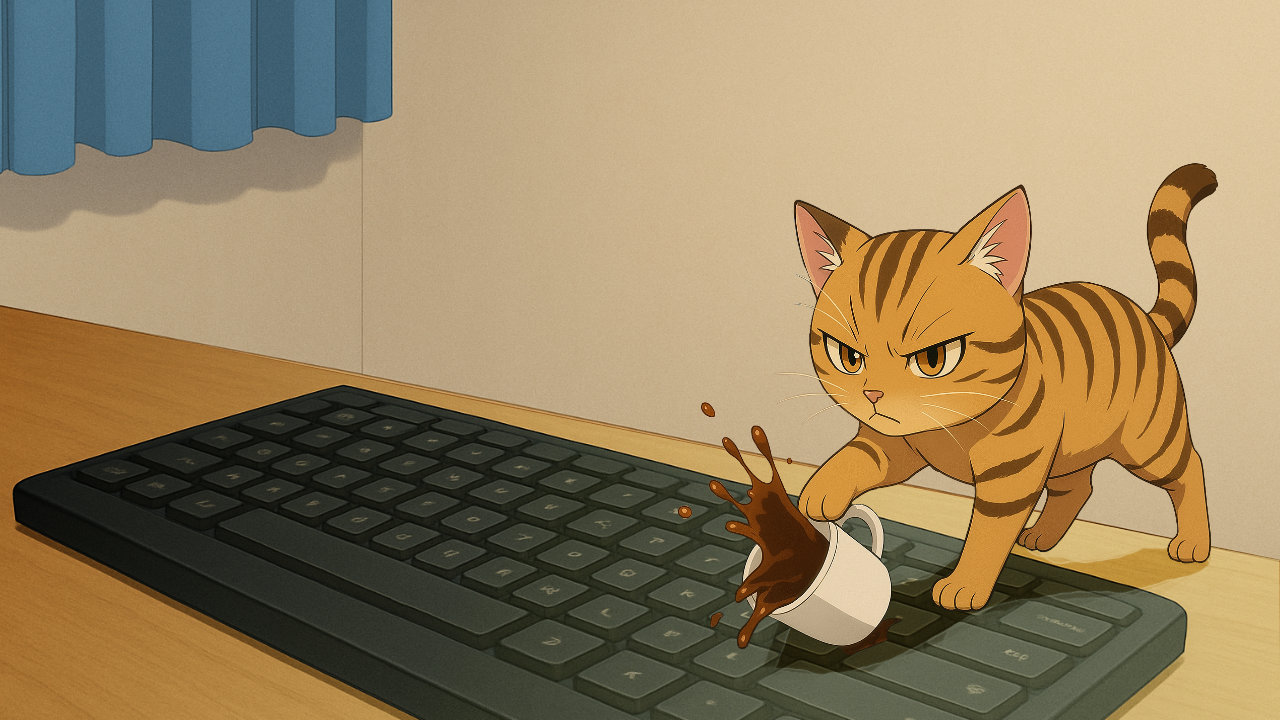

… of a tiger striped cat knocking over a mug of coffee as it walks nonchalantly across a black computer keyboard.

This results in the image below which, absent dimension information, defaulted to a ‘vertical’ or ‘mobile friendly’ format. It has the ‘tiger striped cat’, the ‘knocked over coffee mug’ and the ‘computer keyboard’, but doesn’t quite have the ‘nonchalant walk’. It’s not exactly what was asked for, but in terms of a ‘creative’ image, the result is a ‘happy accident’ that can be worked with. So…

The initial prompt appended to the auto-generated ‘style guide’ results in a vertical image, friendly for mobile devices.

… while being thematically spot on, the first modification needed was a dimension change: the image had to be re-rendered to correct the orientation from vertical to horizontal, so the prompt was updated to:

Change the dimensions of the generated image to 1920 x 1080 pixels, change the background and other image elements to accommodate the updated images dimension.

While this addition maintained the theme and adjusted the aspect ratio, dimensions are service-limited to 1536 x 1024 pixels, and the cat is positioned noticeably centre-screen. Given its pose, that is not compositionally balanced, although it is to be expected absent explicit placement instructions. Rather than add such instructions, and potentially waste time rendering and re-rendering the scene, a different approach is needed: rendering ‘layers’.

The Chibi cat scene re-rendered with adjusted (but limited) dimensions, with the cat noticeably centre-screen.
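
As a worked example of the dimension mismatch: 1536 x 1024 is a 3:2 image, whereas the requested 1920 x 1080 is 16:9, so the render cannot simply be scaled up to fit. The sketch below is one possible way to work out the numbers, assuming a centred crop followed by an enlargement is acceptable; the function name `crop_box_for_aspect` is made up for illustration and is not part of any tool mentioned here.

```python
from fractions import Fraction


def crop_box_for_aspect(width, height, target_w, target_h):
    """Return (left, top, right, bottom) for the largest centred crop of a
    width x height image that has the aspect ratio target_w:target_h."""
    target = Fraction(target_w, target_h)
    if Fraction(width, height) > target:
        # Source is wider than the target shape: trim the sides.
        new_w = int(height * target)
        left = (width - new_w) // 2
        return (left, 0, left + new_w, height)
    # Source is taller than (or equal to) the target shape: trim top and bottom.
    new_h = int(width / target)
    top = (height - new_h) // 2
    return (0, top, width, top + new_h)


# The service-limited render versus the size originally asked for.
box = crop_box_for_aspect(1536, 1024, 1920, 1080)
scale = Fraction(1920, box[2] - box[0])
print(box, float(scale))  # (0, 80, 1536, 944) 1.25
```

In other words, 80 pixels would be lost from the top and bottom, and the remaining 1536 x 864 area would need enlarging by a factor of 1.25 to reach 1920 x 1080, with some loss of sharpness. A centred crop is only an assumption; in practice the crop would be placed to suit the composition.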

Generate Layers Not Images

Although AI image generators don’t explicitly render layered images, prompts can be written to that effect: individual elements of a scene can be rendered separately over monochromatic, single-coloured backgrounds that can later be edited or masked out. Doing this means the overall ginger tiger-striped cat render can be broken down into a number of separate images for ‘compositing’ in an image editor. With this in mind, and using the same initial aspect-corrected but dimensionally locked parent image, the next prompts given were:

To render the background on its own (default 1536 x 1024 pixels locked dimensions still being used)…

Continuing with the same image style and dimensions, remove the cat, coffee mug and spilled coffee from the image above so only the background, desk top and computer keyboard are visible.

Having AI re-render the original image without the cat so only background elements are present.

And to render the cat and coffee mug on their own…

Render the cat and spilled coffee mug on their own over a plain white background.

Similarly asking the AI to re-render the scene excluding the background so only the cat and coffee mug are visible.

Image Compositing

Once ChatGPT (in this instance) has rendered the core elements of the original image separately, they can be saved (as PNG) and imported into an image editor for masking, editing and compositing together, allowing for much greater control over size, placement and object relationships than an AI would ever be able to discern from a text prompt.

Design note: once images are rendered separately, depending on what they are and how the elements are to be composited, they will likely need to be masked because AI cannot (currently) mask objects. How this is done will vary depending on the image editor being used.
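
For anyone who would rather script a rough first pass than mask by hand, the idea behind removing a plain white background can be sketched in a few lines. This is a minimal illustration only, assuming the pixel data has already been loaded as rows of RGB tuples (for example with an imaging library); the function name `key_out_white` and the threshold of 245 are made up for the example.

```python
def key_out_white(pixels, threshold=245):
    """Make near-white pixels fully transparent.

    pixels: list of rows, each row a list of (r, g, b) tuples, 0..255.
    Returns rows of (r, g, b, a) tuples where a is 0 for near-white
    pixels and 255 for everything else.
    """
    keyed = []
    for row in pixels:
        keyed.append([
            # A pixel counts as background only if all channels are bright.
            (r, g, b, 0 if min(r, g, b) >= threshold else 255)
            for (r, g, b) in row
        ])
    return keyed


# A tiny 2 x 2 stand-in for a render on a white background.
tiny = [
    [(255, 255, 255), (200, 120, 40)],
    [(250, 248, 246), (10, 10, 10)],
]
keyed = key_out_white(tiny)
```

This is deliberately crude: it produces hard edges, and it will also punch holes in any white parts of the subject itself, such as eye highlights or a white mug. An image editor's selection and masking tools will generally do a better job, which is why the masking step is described above as editor-dependent.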

Compositing the separate renders together to produce the picture wanted.
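
What an image editor does when one layer is placed over another can be sketched as follows. This is an illustrative outline of the standard ‘over’ blend rather than a description of any particular editor; it assumes the background is rows of RGB tuples and the masked layer is rows of RGBA tuples, and `composite_over` is a hypothetical name.

```python
def composite_over(background, layer, offset_x, offset_y):
    """Place an RGBA layer onto an RGB background at (offset_x, offset_y).

    background: rows of (r, g, b) tuples.
    layer: rows of (r, g, b, a) tuples, with a in 0..255.
    Returns a new background; layer pixels that fall outside it are skipped.
    """
    result = [list(row) for row in background]
    for y, row in enumerate(layer):
        for x, (r, g, b, a) in enumerate(row):
            bx, by = x + offset_x, y + offset_y
            if not (0 <= by < len(result) and 0 <= bx < len(result[by])):
                continue
            br, bg, bb = result[by][bx]
            # "Over" blend: weight the layer by its alpha, the background by the rest.
            result[by][bx] = (
                (r * a + br * (255 - a)) // 255,
                (g * a + bg * (255 - a)) // 255,
                (b * a + bb * (255 - a)) // 255,
            )
    return result


# A 3 x 2 blue background and a 2 x 1 layer: one opaque red pixel,
# one fully transparent pixel.
blue = [[(0, 0, 255)] * 3 for _ in range(2)]
layer = [[(255, 0, 0, 255), (0, 255, 0, 0)]]
combined = composite_over(blue, layer, offset_x=1, offset_y=0)
```

The offsets are where the control over placement comes from: moving the cat off-centre is a matter of changing two numbers rather than re-prompting and re-rendering the whole scene.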

Image Components

The greater advantage of getting AI to render separate images for (re)composition is that any of the separate images can be modified in and of themselves, for example having the cat re-rendered exactly as is, but playing with a Christmas bauble decoration instead of the coffee mug, thus allowing for a degree of simple customisation. The prompt to do something like that might then be:

Render the same image, the cat in the exact same pose, but replace the coffee mug and spilled coffee with a Christmas bauble decoration.

Re-rendering the cat to hold a Christmas-tree bauble as a themed alternative.

Once alternatives are available, they too can be edited and ‘composited’ for similar effect. Similarly, each image ‘layer’ can be edited to accommodate better ‘characterisations’, i.e. better eyes, mouth etc., all pulled together to produce a composition the image-generating AI would never manage on its own.

Re-rendering the cat so core elements can be thematically swapped out.
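
If several themed alternatives are wanted, the swap prompt can be treated as a template so that only the replacement object changes between requests. The sketch below simply builds the prompt text; the template wording is taken from the bauble prompt above, while `variant_prompts` and the extra replacement objects are hypothetical examples.

```python
# Wording taken from the bauble prompt; only the replacement is variable.
VARIANT_TEMPLATE = (
    "Render the same image, the cat in the exact same pose, "
    "but replace the coffee mug and spilled coffee with {replacement}."
)


def variant_prompts(replacements):
    """Return one swap prompt per replacement object."""
    return [VARIANT_TEMPLATE.format(replacement=item) for item in replacements]


prompts = variant_prompts([
    "a Christmas bauble decoration",
    "a ball of wool",   # hypothetical alternative
    "a small pumpkin",  # hypothetical alternative
])
for prompt in prompts:
    print(prompt)
```

Keeping everything except the replacement fixed will not guarantee an identical pose from the generator, but it does keep the request itself consistent from one variant to the next.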